Why does Virtusize need computer vision?

Choosing the right size when shopping for clothes online is hard. At Virtusize, our main goal is to make that decision easy, and machine learning plays a big role in achieving that. One branch of machine learning is computer vision, which includes image recognition among other things and is a particularly useful tool in fashion tech. Even a task as simple as scraping the product type (whether an item is a pair of pants or a skirt, for example) can be tricky on some product pages. In those cases, we need image recognition to determine the product type. Another important use case is extracting product measurements from PDF tables using optical character recognition (OCR). Furthermore, image recognition is the only method that can be used at scale to predict the style or fit of a product, because product descriptions usually don’t contain that type of information. Is this sweater a cardigan or a pullover? Is this skirt tight or flared? Questions like these can be answered after training models on thousands of images to tell the difference between the categories.

What is image recognition?

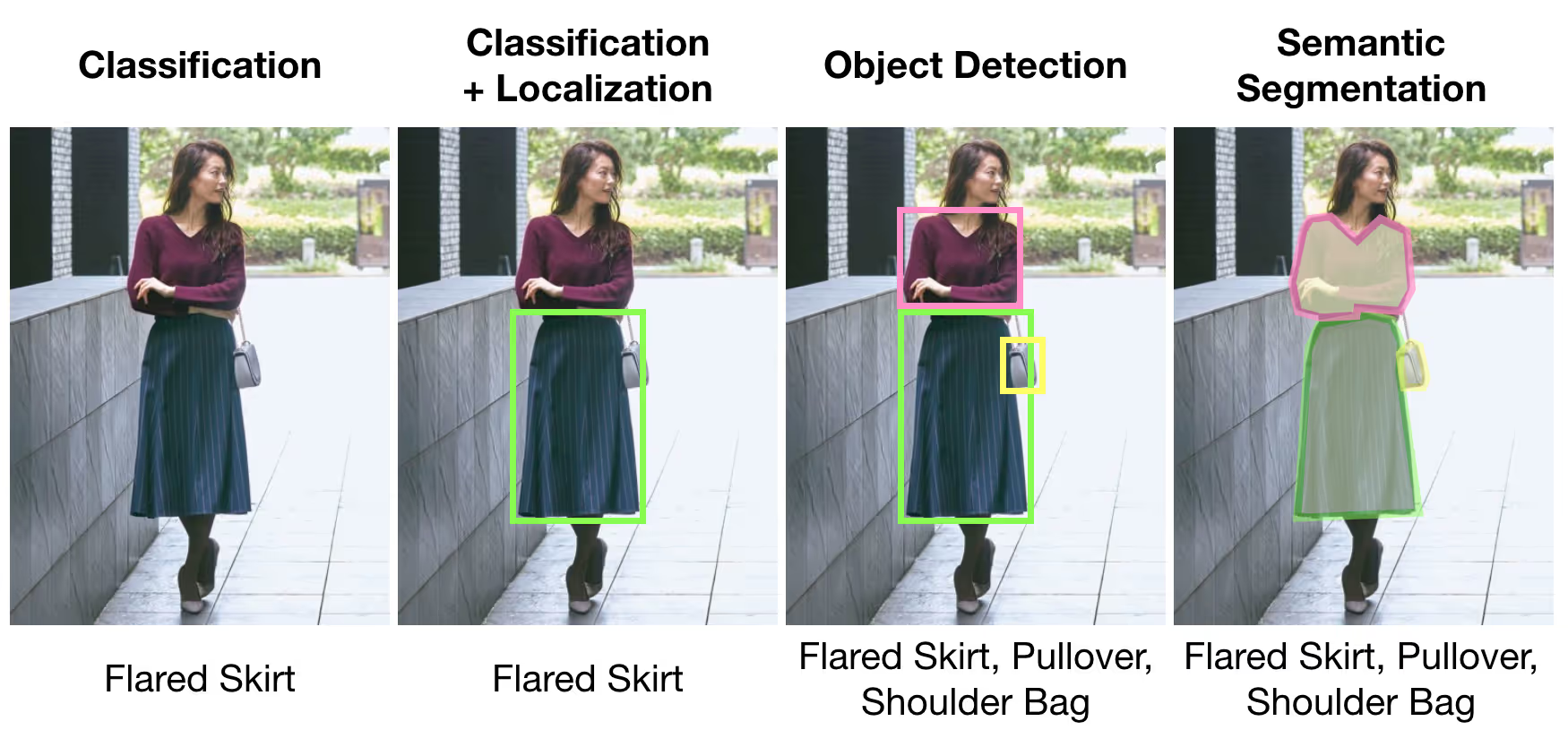

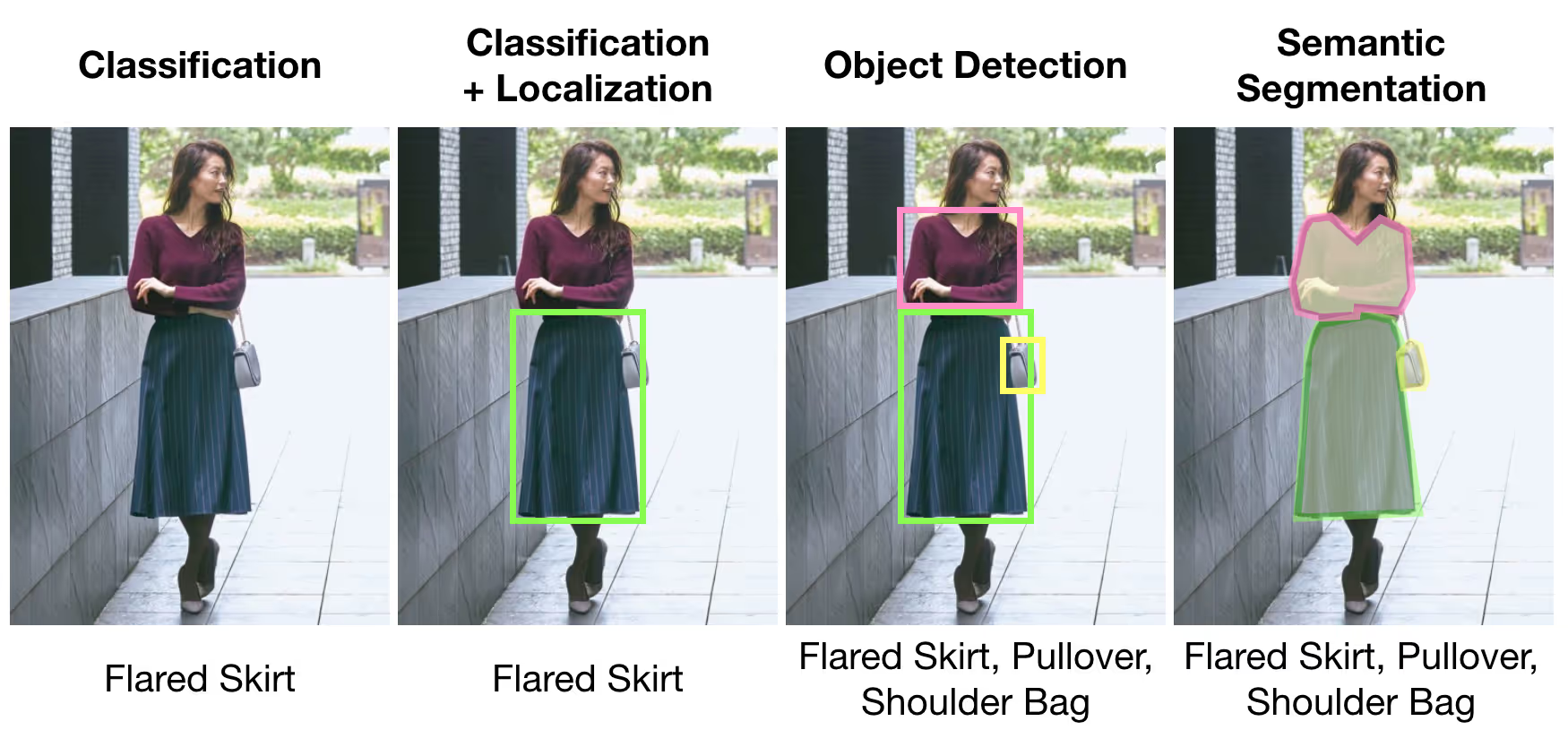

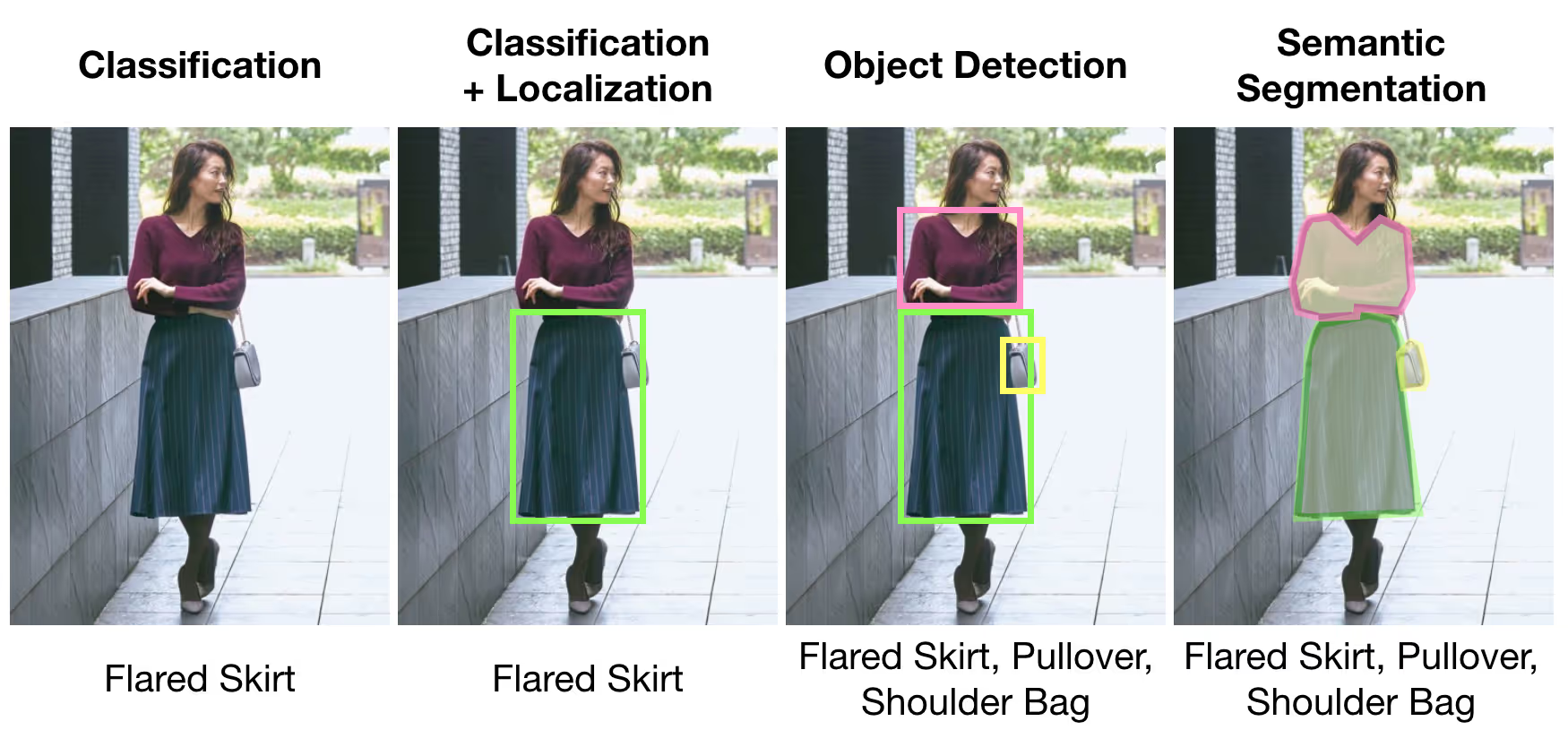

There are several types of image recognition as seen in Figure 1. The simplest one is classification. That’s when a model assigns a single label to an image. If a model is trained to tell the difference between tight and flared skirts, then after receiving an image it will output “flared”, for example. A classification and localization model will draw a bounding box around the item of interest before classifying it. This could be useful for images with particularly busy backgrounds. If multiple objects need to be recognized, then object detection is the best choice. Every object in the list of possible categories will get a bounding box and a label. For tasks that require knowing the shape of an object, semantic or instance segmentation is needed. Semantic segmentation recognizes each category as a unit while instance segmentation gives a separate shape to each object in the class.
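To make the difference between semantic and instance segmentation concrete, here is a minimal sketch in plain Python. The tiny grid, the class id `1` standing for "skirt", and the helper names `split_into_instances` and `bounding_box` are all hypothetical and made up for illustration. Real instance segmentation models predict the per-object masks directly; splitting a semantic mask into connected blobs, as done here, only works when objects of the same class do not touch or overlap.

```python
from collections import deque

# Toy semantic mask (hypothetical data): 0 = background, 1 = "skirt".
# Semantic segmentation stops here: both skirts share the same label.
SEMANTIC_MASK = [
    [0, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0, 0],
]


def split_into_instances(mask, target_class):
    """Give every 4-connected blob of target_class its own id (1, 2, ...)."""
    rows, cols = len(mask), len(mask[0])
    instances = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != target_class or instances[r][c] != 0:
                continue
            # Unvisited pixel of the target class: start a new instance
            # and flood-fill its neighbours with the same id.
            next_id += 1
            instances[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == target_class
                            and instances[ny][nx] == 0):
                        instances[ny][nx] = next_id
                        queue.append((ny, nx))
    return instances, next_id


def bounding_box(instances, instance_id):
    """Return (top, left, bottom, right), inclusive, for one instance."""
    cells = [(r, c)
             for r, row in enumerate(instances)
             for c, value in enumerate(row)
             if value == instance_id]
    ys = [r for r, _ in cells]
    xs = [c for _, c in cells]
    return min(ys), min(xs), max(ys), max(xs)


instance_mask, instance_count = split_into_instances(SEMANTIC_MASK, target_class=1)
```

The instance mask carries strictly more information than the semantic one: from it you can also recover the per-object bounding boxes that an object detection model would output, for example with `bounding_box(instance_mask, 1)`.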

What is transfer learning?

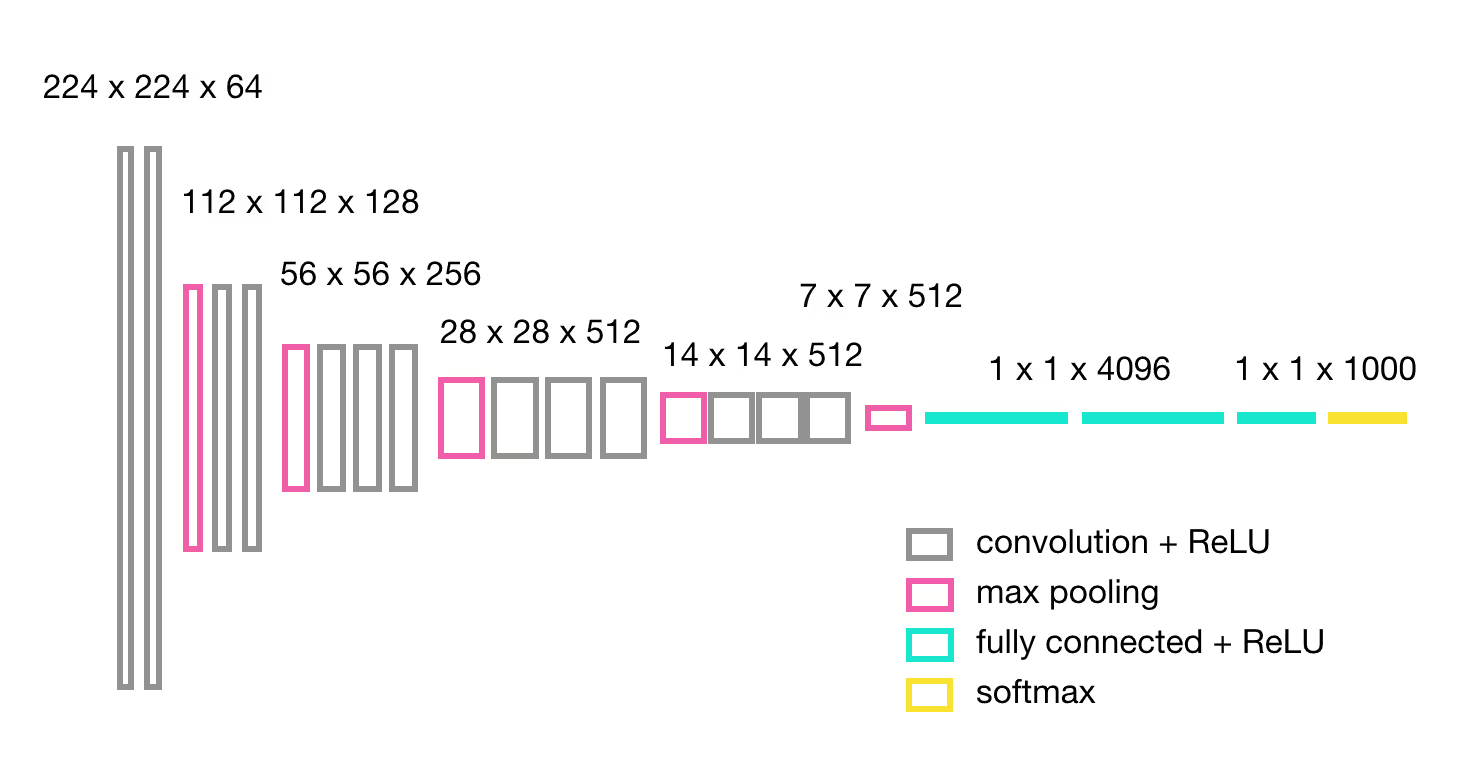

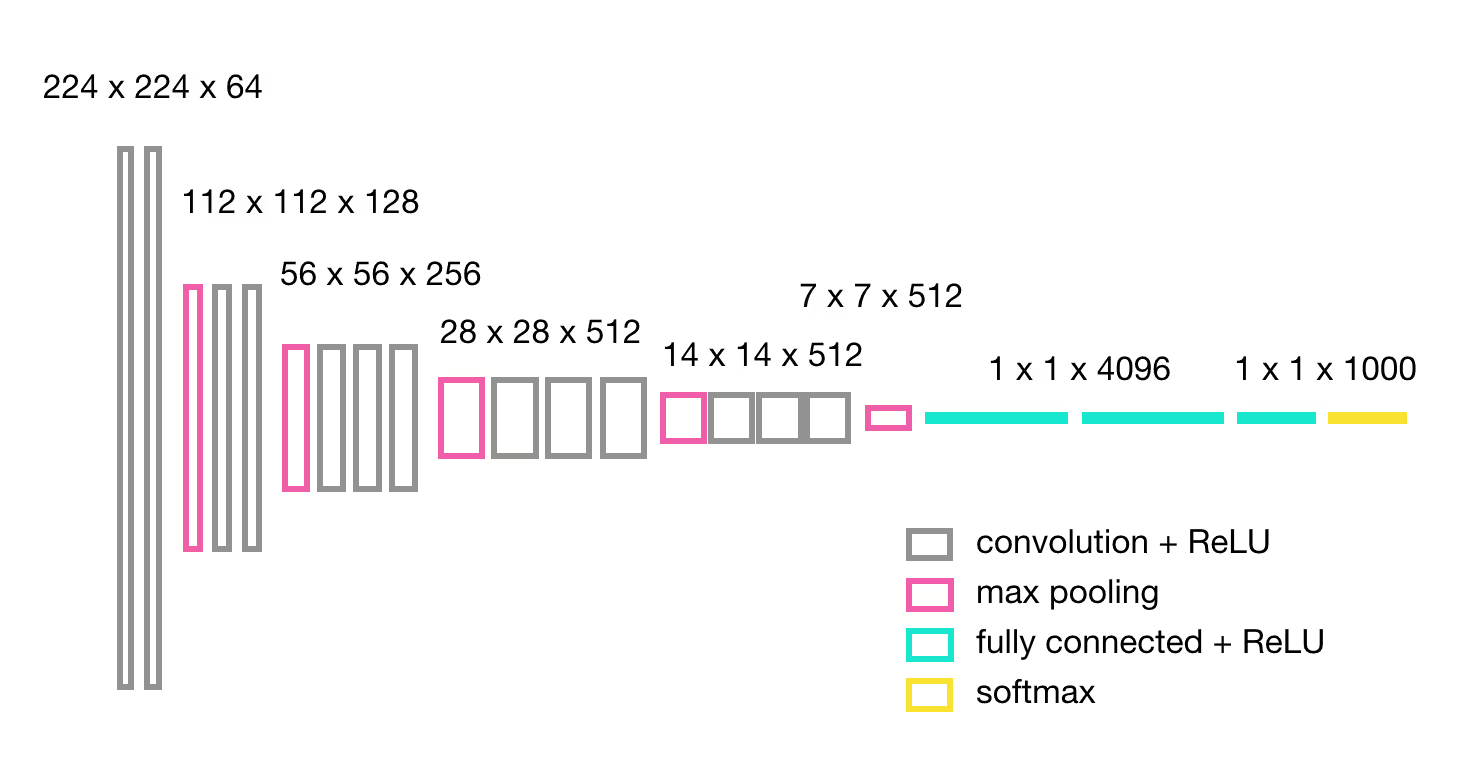

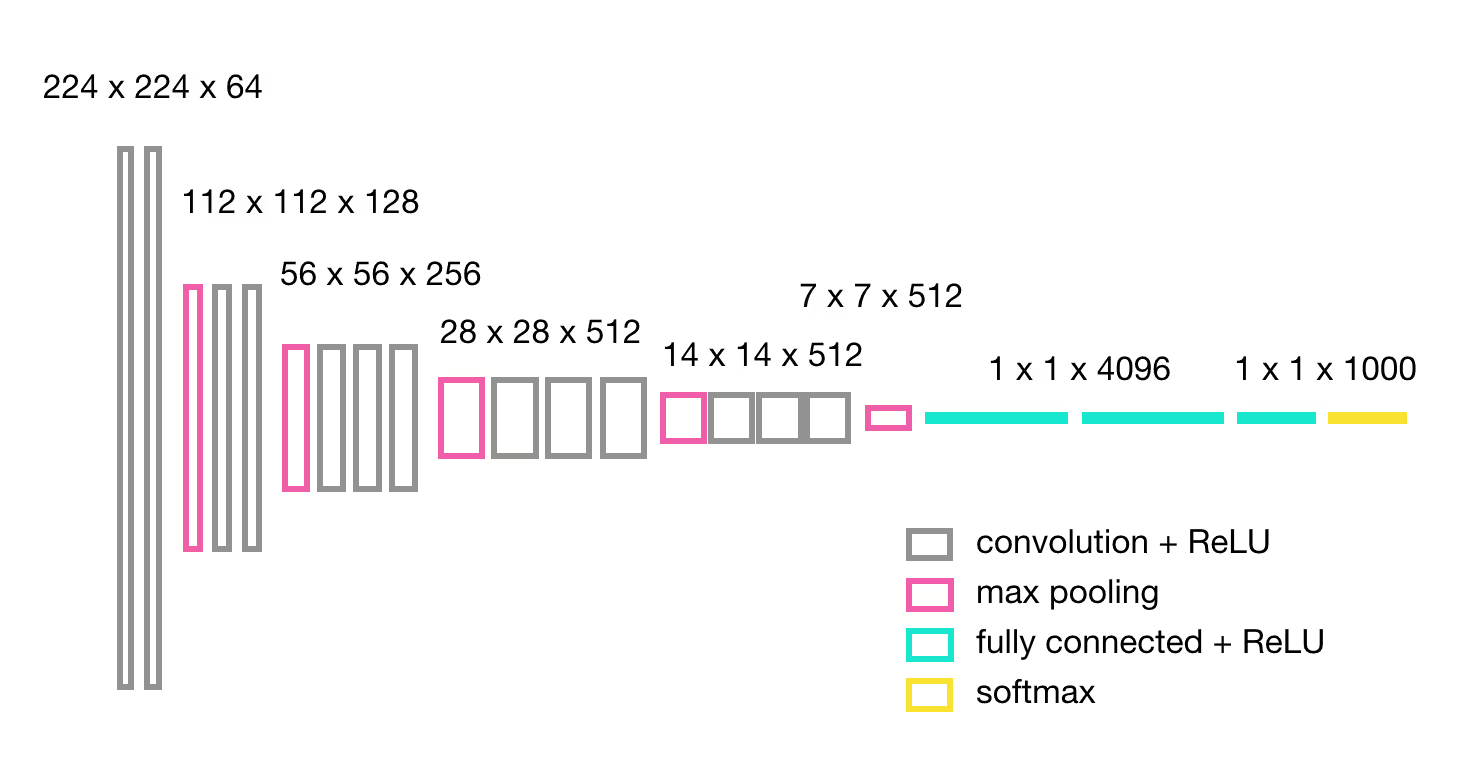

Image classification models need a lot of images and a lot of time to train. For example, VGG16 [1], a well-known convolutional neural network proposed in 2014, took two to three weeks to train on roughly 1.3 million ImageNet images belonging to one thousand categories. If you don’t have thousands of images for each category or lack access to GPUs (computing power), transfer learning is a good solution. As an example, let’s look at VGG16’s architecture shown in Figure 2. For a model that predicts flared vs. tight skirts, the simplest thing to do would be to replace the very last layer, which outputs a one-thousand-dimensional vector, with a layer that outputs a two-dimensional vector. Then only that last layer needs to be retrained while the weights of all the other layers stay fixed. If that doesn’t give accurate results, the weights of the first couple of layers can be kept fixed while the other layers are retrained. This way a model keeps what it learned from millions of images about recognizing basic shapes and textures while still learning the specific characteristics of your images. If one architecture doesn’t achieve the desired accuracy, one can always try others. There are many pretrained models these days: Inception, ResNet, MobileNet, DenseNet, etc.
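The "freeze everything, retrain only the last layer" idea can be sketched without any deep learning framework. The example below is a toy under stated assumptions, not VGG16 and not our production code: `FROZEN_BACKBONE` is a hypothetical four-unit layer standing in for the pretrained layers, each "image" is reduced to two made-up numbers, and `NewHead` plays the role of the replacement two-output layer. With a real network you would load pretrained weights in your framework of choice and swap its final layer in the same spirit.

```python
import math

# Stand-in for the pretrained layers (hypothetical weights, not VGG16's).
# Nothing below ever writes to this list, which is what "frozen" means here.
FROZEN_BACKBONE = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, -1.0],
    [-1.0, 1.0],
]


def extract_features(x):
    """Frozen forward pass: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_BACKBONE]


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class NewHead:
    """Replacement last layer: n_features in, n_classes scores out."""

    def __init__(self, n_features, n_classes):
        self.weights = [[0.0] * n_features for _ in range(n_classes)]
        self.biases = [0.0] * n_classes

    def predict_proba(self, features):
        logits = [sum(w * f for w, f in zip(row, features)) + b
                  for row, b in zip(self.weights, self.biases)]
        return softmax(logits)

    def train(self, samples, labels, learning_rate=0.5, epochs=2000):
        """Full-batch gradient descent on cross-entropy; only the head changes."""
        feature_rows = [extract_features(x) for x in samples]
        n = len(samples)
        for _ in range(epochs):
            grad_w = [[0.0] * len(row) for row in self.weights]
            grad_b = [0.0] * len(self.biases)
            for features, label in zip(feature_rows, labels):
                probs = self.predict_proba(features)
                for k, p in enumerate(probs):
                    error = p - (1.0 if k == label else 0.0)
                    grad_b[k] += error / n
                    for j, f in enumerate(features):
                        grad_w[k][j] += error * f / n
            for k in range(len(self.weights)):
                self.biases[k] -= learning_rate * grad_b[k]
                for j in range(len(self.weights[k])):
                    self.weights[k][j] -= learning_rate * grad_w[k][j]


CLASS_NAMES = ["tight", "flared"]

# Hypothetical toy inputs: two numbers per "image" instead of pixels.
TRAIN_X = [(0.5, 0.5), (0.6, 0.55), (0.4, 0.45), (0.7, 0.6),
           (0.4, 0.9), (0.5, 1.0), (0.3, 0.8), (0.6, 1.0)]
TRAIN_Y = [0, 0, 0, 0, 1, 1, 1, 1]


def classify(head, x):
    probs = head.predict_proba(extract_features(x))
    return CLASS_NAMES[probs.index(max(probs))]


backbone_before = [row[:] for row in FROZEN_BACKBONE]  # copy, to check freezing
head = NewHead(n_features=4, n_classes=2)
head.train(TRAIN_X, TRAIN_Y)
```

Because the backbone output is computed once and reused in every epoch, training touches only a handful of head parameters. That is the reason this approach stays cheap even when the frozen part is a very large network. Unfreezing some of the later layers, as described above, would mean updating those weights in the training loop as well.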

How does image recognition improve our product?

Below are a couple of examples of how computer vision helps us improve the experience of Virtusize users. An online store will often combine all kinds of jackets into one category. A puffy winter jacket will be under the same category as a thin leather jacket, for example. Recommending a size becomes tricky if we don’t know whether several other garments are meant to be worn underneath. Having an image recognition model label a jacket as “protective” lets us know that an item should be somewhat loose since it will be worn over shirts or sweaters. This idea of intended fit applies to any kind of garment. A sweater could be very tight or loose depending on the style, as shown in Figure 4. The only way to know the intended fit of an item is to look at its image, and the only way to do it at scale is with computer vision. Knowing that a shirt is supposed to be tight prevents us from recommending a size that is too large. These are just a few of the applications of computer vision at Virtusize. Hopefully this post provides a good introduction to image recognition and its potential in fashion tech.

References

[1] Karen Simonyan, Andrew Zisserman, Very Deep Convolutional Networks for Large-Scale Image Recognition. https://arxiv.org/pdf/1409.1556.pdf
